TensorFlow is an open-source, high-performance library for numerical computation.

It's not just about machine learning; it's about any numeric computation. In fact, people have used TensorFlow for all kinds of GPU computing. For example, you can use TensorFlow to solve partial differential equations, which are useful in domains like fluid dynamics. TensorFlow as a numeric programming library is appealing because you can write your computation code in a high-level language, Python for example, and have it execute quickly.

The way TensorFlow works is that you create a directed acyclic graph, a DAG, to represent your computation. In this schematic, the nodes represent mathematical operations: things like adding, subtracting, and multiplying, and also more complex functions. Here, for example, you see softmax and matrix multiplication. These are all mathematical operations that are part of the DAG. Connecting the nodes in the DAG are the edges, the inputs and outputs of the mathematical operations. The edges represent arrays of data. Essentially, the result of computing cross entropy is one of the three inputs to the bias add operation, and the output of the bias add operation is sent along to the matrix multiplication operation, matmul in the diagram. A matrix multiplication needs two inputs; the other input to matmul is a variable, the weight.

So where does the name TensorFlow come from? In math, a simple number like three or five is called a scalar. A vector is a one-dimensional array of such numbers. In physics a vector is something with magnitude and direction, but in computer science we use vector to mean a 1D array. A two-dimensional array is a matrix, and a three-dimensional array we just call a 3D tensor. So: scalar, vector, matrix, 3D tensor, 4D tensor, and so on. A tensor is an n-dimensional array of data. Your data in TensorFlow are tensors, and they flow through the directed acyclic graph; hence TensorFlow.
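To make the graph idea concrete, here is a minimal plain-Python sketch of a dataflow graph in which nodes are operations and edges carry tensors. It is not TensorFlow code and not the graph from the schematic: the names `Node`, `constant`, `matmul`, `bias_add`, and `rank` are hypothetical helpers invented for illustration, and tensors are represented as nested Python lists.

```python
# Illustrative sketch only: a tiny dataflow graph in plain Python.
# Node, constant, matmul, bias_add and rank are hypothetical helpers,
# not TensorFlow APIs. Tensors are modeled as nested lists.

def rank(t):
    """Number of dimensions of a nested-list tensor (0 for a plain number)."""
    r = 0
    while isinstance(t, list):
        r += 1
        if not t:          # empty list: stop, nothing to descend into
            break
        t = t[0]
    return r


class Node:
    """One operation in the graph; its inputs are the incoming edges."""

    def __init__(self, op, inputs=()):
        self.op = op
        self.inputs = tuple(inputs)

    def evaluate(self):
        # Evaluate upstream nodes first, then apply this node's operation.
        args = [node.evaluate() for node in self.inputs]
        return self.op(*args)


def constant(value):
    """A node with no inputs that simply emits a fixed tensor."""
    return Node(lambda: value)


def matmul(a, b):
    """A node that multiplies two rank-2 tensors (matrices)."""
    def op(x, y):
        return [[sum(xi * yi for xi, yi in zip(row, col)) for col in zip(*y)]
                for row in x]
    return Node(op, [a, b])


def bias_add(a, b):
    """A node that adds a rank-1 bias to every row of a matrix."""
    def op(x, bias):
        return [[v + bv for v, bv in zip(row, bias)] for row in x]
    return Node(op, [a, b])


x = constant([[1.0, 2.0]])                # 1x2 matrix (rank 2)
w = constant([[1.0, 0.0], [0.0, 1.0]])    # 2x2 weight matrix (rank 2)
b = constant([0.5, -0.5])                 # bias vector (rank 1)

y = bias_add(matmul(x, w), b)   # building the graph computes nothing yet
result = y.evaluate()           # tensors flow along the edges only here

print(rank(3), rank([1, 2]), rank([[1, 2]]), rank([[[1]]]))
print(result)
```

Building `y` only wires nodes together; no arithmetic happens until `evaluate()` is called, which mirrors the separation between describing a computation and running it. The `rank` helper follows the scalar, vector, matrix, 3D tensor progression described above.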

TensorFlow Lite

TensorFlow Lite provides on-device inference of ML models on mobile devices and is available for a variety of hardware.

TensorFlow API Hierarchy

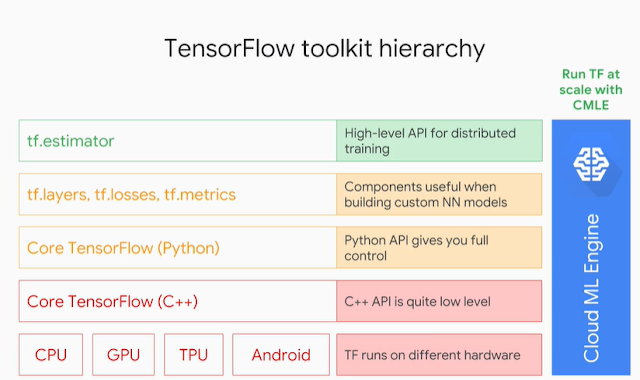

Like most software libraries, TensorFlow has a number of abstraction layers.

The lowest level of abstraction is a layer that's implemented to target different hardware platforms. Unless your company makes hardware, it's unlikely that you will do much at this level.

The next level is the TensorFlow C++ API. This is how you can write a custom TensorFlow op: you implement the function you want in C++ and register it as a TensorFlow operation. See the TensorFlow documentation on extending TensorFlow with custom ops. TensorFlow will then give you a Python wrapper that you can use just like you would an existing function. In this specialization, though, we'll assume that you're not an ML researcher, so you won't have to do this. But if you ever need to implement your own custom op, you would do it in C++, and it's not too hard. TensorFlow is extensible that way.

The core Python API, the next level, contains much of the numeric processing code: add, subtract, divide, matrix multiply, and so on. Creating variables, creating tensors, getting the shape or the dimensions of a tensor, all that core numeric processing is in the Python API.

Then there is a set of Python modules that provide high-level representations of useful neural network components. For example, a way to create a new layer of hidden neurons with a ReLU activation function is in tf.layers; a way to compute the root mean squared error on data as it comes in is in tf.metrics; and a way to compute cross entropy with logits, a common loss measure in classification problems, is in tf.losses. These modules provide components that are useful when building custom NN models.

Why do I emphasize custom NN models? Because a lot of the time you don't need a custom neural network model. Many times you are quite happy to go with a relatively standard way of training, evaluating, and serving models. You don't need to customize the way you train: you're going to use one of a family of gradient descent optimizers, you're going to backpropagate to update the weights, and you're going to do this iteratively. In that case, don't write a low-level session loop. Just use an estimator.

The estimator is the high-level API in TensorFlow. It knows how to do distributed training, how to evaluate, how to create a checkpoint, how to save a model, and how to set it up for serving. It comes with everything done in a sensible way that fits most machine learning models in production. So if you see example TensorFlow code on the Internet and it doesn't use the estimator API, just ignore that code and walk away; it's not worth it. You'd have to write a whole bunch of code to do device placement, memory management, and distribution. Let the estimator do it for you.

So those are the TensorFlow levels of abstraction. Cloud ML Engine (CMLE) is orthogonal to this hierarchy. Regardless of which abstraction level you're writing your TensorFlow code at, CMLE gives you a managed service. It's hosted TensorFlow, so you can run TensorFlow in the cloud on a cluster of machines without having to install any software or manage any servers.
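To show what two of the components mentioned above actually compute, here is a plain-Python sketch of cross entropy with logits and root mean squared error. This is not TensorFlow's implementation, and the function names are hypothetical, chosen only to echo the concepts; it assumes a single example with a list of class logits and a list of label probabilities.

```python
import math

# Illustrative sketch only: the math behind two common loss/metric
# components. Function names are hypothetical, not TensorFlow APIs.


def softmax_cross_entropy_with_logits(labels, logits):
    """Cross entropy between label probabilities and softmax(logits).

    "With logits" means the inputs are raw, unnormalized scores; the
    softmax is applied inside the loss instead of by the caller.
    """
    m = max(logits)  # subtract the max before exponentiating, for stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # log softmax(z_i) = z_i - log_sum_exp; the loss is -sum(y_i * log p_i)
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))


def root_mean_squared_error(labels, predictions):
    """Square root of the mean squared difference between two sequences."""
    squared = [(p - y) ** 2 for y, p in zip(labels, predictions)]
    return math.sqrt(sum(squared) / len(squared))


# Three classes with equal logits: the model is maximally unsure,
# so the loss equals log(3) regardless of which class is correct.
loss = softmax_cross_entropy_with_logits([0.0, 1.0, 0.0], [2.0, 2.0, 2.0])

# Errors of 0, 0 and 2 give a mean squared error of 4/3.
rmse = root_mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])

print(loss, rmse)
```

In practice you would call the library's own loss and metric functions rather than hand-rolling them; the sketch is only meant to make clear what the higher-level modules save you from writing.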

Companies using TensorFlow:

Google, CEVA, Snapchat, SAP, Uber, Twitter, Airbnb, eBay, Intel, Dropbox, DeepMind, Airbus, MI, and IBM.
